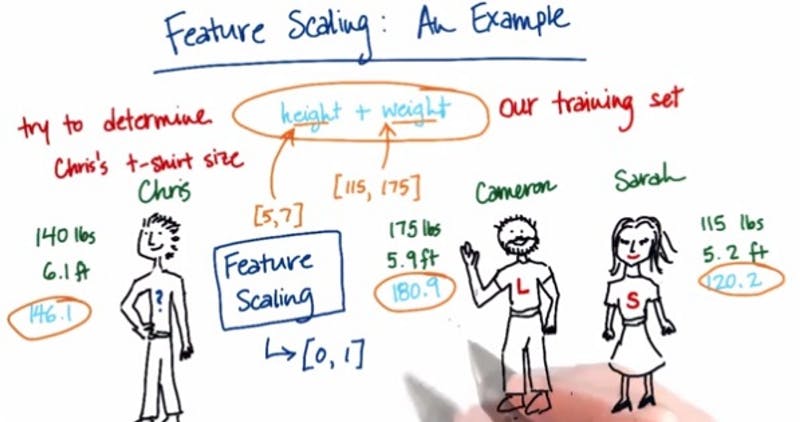

Feature scaling is one of the most important steps in preprocessing data before building a machine learning model. It can make the difference between a weak model and a strong one. The two most important scaling techniques are Standardization and Normalization.

Normalization: Normalization is the process of rescaling one or more attributes to the range of 0 to 1. This means that the largest value for each attribute is 1 and the smallest value is 0.

Standardization: Standardization typically means rescaling data to have a mean of 0 and a standard deviation of 1 (unit variance).
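As a minimal sketch of standardization, the z-score can be computed by hand with NumPy. The feature values below are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical feature values, chosen only for illustration.
x = np.array([2.0, 4.0, 6.0, 8.0, 10.0])

# Standardization (z-score): subtract the mean, then divide by the
# standard deviation. x.std() uses the population formula (ddof=0).
z = (x - x.mean()) / x.std()

print(z)
print(z.mean())  # approximately 0
print(z.std())   # approximately 1
```

After the transformation the feature is centered at 0 and has unit variance, whatever its original scale was.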

A good reason to perform feature scaling is to ensure that one feature doesn't dominate the others.

Why and Where to Apply Feature Scaling?

Real-world datasets often contain features that vary widely in magnitude, unit, and range. Normalize when the scale of a feature is arbitrary or misleading; do not normalize when the scale is meaningful.

Algorithms that use the Euclidean distance are sensitive to feature magnitudes. Feature scaling helps weigh all features equally. If one feature in the dataset is much larger in scale than the others, it dominates the distance calculation and needs to be scaled.
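To make the dominance effect concrete, here is a small sketch with three hypothetical people described by age and income. The helper `income_share` is an illustrative name, not a library function; it reports how much of the squared Euclidean distance comes from the income column.

```python
import numpy as np

# Hypothetical records: (age in years, income in dollars).
data = np.array([
    [25.0, 50_000.0],   # person a
    [60.0, 51_000.0],   # person b: very different age, similar income
    [26.0, 58_000.0],   # person c: similar age, different income
])

def income_share(p, q):
    """Fraction of the squared Euclidean distance contributed by income."""
    squared_diff = (p - q) ** 2
    return squared_diff[1] / squared_diff.sum()

# Before scaling: the 35-year age gap between a and b is almost invisible.
raw_share = income_share(data[0], data[1])

# Min-max scale each column to [0, 1], then measure again.
scaled = (data - data.min(axis=0)) / (data.max(axis=0) - data.min(axis=0))
scaled_share = income_share(scaled[0], scaled[1])

print(raw_share)     # close to 1: income dominates the distance
print(scaled_share)  # close to 0: the age gap now drives the distance
```

With the raw values, income accounts for nearly all of the distance between a and b simply because it is measured in larger numbers. After scaling, the large age gap is what separates them.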

Examples of Algorithms where Feature Scaling matters

- K-Means

- K-Nearest-Neighbours

- Principal Component Analysis (PCA)

- Gradient Descent

In short, algorithms that rely on neither distances, variances, nor gradient-based optimization (tree-based models, for example) are largely unaffected by feature scaling.

Min-Max Normalisation: This technique re-scales a feature so that its values lie between 0 and 1.
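A minimal sketch of min-max normalisation, written by hand with NumPy. The input values are hypothetical.

```python
import numpy as np

# Hypothetical feature values, chosen only for illustration.
x = np.array([10.0, 20.0, 35.0, 50.0])

# Min-max normalisation: shift by the minimum, divide by the range.
# Note: this divides by zero if every value in x is identical.
x_scaled = (x - x.min()) / (x.max() - x.min())

print(x_scaled)  # values 0, 0.25, 0.625 and 1
```

The smallest value maps to 0, the largest to 1, and everything else keeps its relative position in between.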

Example 1

Example 2

Without feature scaling, an algorithm sees only the raw numbers, so it can treat 4000 (meters) as greater than 6 (kilometers), even though 6 km is the longer distance. In that case the algorithm will give wrong predictions. Feature scaling brings all values to the same magnitude and so tackles this issue.
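One way to see why scaling helps with mixed units: standardized values do not depend on the unit a feature was recorded in. The sketch below uses hypothetical distances and an illustrative helper named `standardize`.

```python
import numpy as np

def standardize(x):
    """Z-score: zero mean, unit standard deviation."""
    return (x - x.mean()) / x.std()

# The same hypothetical distances, recorded in two different units.
distances_km = np.array([6.0, 4.0, 10.0, 2.0])
distances_m = distances_km * 1000.0

z_km = standardize(distances_km)
z_m = standardize(distances_m)

# The raw numbers differ by a factor of 1000, the z-scores do not.
print(np.allclose(z_km, z_m))  # True
```

Once each feature is standardized, a model compares values relative to that feature's own spread rather than by the size of the raw numbers.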

Scaling vs. Normalization: What's the difference?

One reason it's easy to confuse scaling and normalization is that the terms are sometimes used interchangeably and, to make it even more confusing, they are very similar! In both cases, you're transforming the values of numeric variables so that the transformed data points have specific helpful properties. The difference is that in scaling you're changing the range of your data, while in normalization you're changing the shape of its distribution.
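The distinction can be sketched numerically, under the definition used in this section. Min-max scaling changes the range but leaves the shape (measured here by skewness) untouched, while a shape-changing transform does not. The log transform below is only one possible choice and is an assumption of this sketch, as is the synthetic data.

```python
import numpy as np

def skewness(values):
    """Sample skewness: the mean of the cubed z-scores."""
    z = (values - values.mean()) / values.std()
    return np.mean(z ** 3)

# Synthetic, right-skewed data (an assumption made for illustration).
rng = np.random.default_rng(0)
x = rng.exponential(scale=2.0, size=10_000)

# Scaling: changes the range to [0, 1], keeps the shape.
x_scaled = (x - x.min()) / (x.max() - x.min())

# Shape-changing transform: log(1 + x) pulls in the long right tail.
x_log = np.log1p(x)

print(skewness(x))         # strongly right-skewed
print(skewness(x_scaled))  # same skewness as the raw data
print(skewness(x_log))     # noticeably closer to 0
```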

Let's talk a little more in-depth about each of these options.

Methods of Feature Scaling

- StandardScaler

- MinMaxScaler

- RobustScaler

- Normalizer
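As a rough side-by-side sketch, the four scikit-learn classes listed above can be applied to the same small array. The data is hypothetical, and the last row contains a deliberate outlier in the second column.

```python
import numpy as np
from sklearn.preprocessing import (
    StandardScaler, MinMaxScaler, RobustScaler, Normalizer
)

# Hypothetical data: two features, with an outlier in the second column.
X = np.array([
    [1.0, 200.0],
    [2.0, 300.0],
    [3.0, 400.0],
    [4.0, 5000.0],
])

standard = StandardScaler().fit_transform(X)  # per column: mean 0, std 1
minmax = MinMaxScaler().fit_transform(X)      # per column: range [0, 1]
robust = RobustScaler().fit_transform(X)      # per column: median 0, scaled by IQR
unit_rows = Normalizer().fit_transform(X)     # per row: unit L2 norm

for name, result in [("standard", standard), ("minmax", minmax),
                     ("robust", robust), ("normalizer", unit_rows)]:
    print(name)
    print(result)
```

Note that the first three work column by column (per feature), while `Normalizer` works row by row (per sample), so it answers a different question than the others.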

STANDARD SCALER

StandardScaler is one of the most widely used feature scaling methods. It works best when the data within each feature is roughly normally distributed, and it scales each feature so that its distribution is centered around 0 with a standard deviation of 1.

Let's take a look at it in action:

Import packages

```python
import pandas as pd
import numpy as np
from sklearn import preprocessing
import matplotlib
import matplotlib.pyplot as plt
import seaborn as sns

# Jupyter/IPython magic: render plots inline (remove when running as a plain script)
%matplotlib inline
matplotlib.style.use('ggplot')
```

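We are going to generate a custom dataset using NumPy

```python
np.random.seed(1)  # fix the seed so the results are reproducible

# Three normally distributed features with different means and spreads
df = pd.DataFrame({
    'x1': np.random.normal(0, 2, 10000),
    'x2': np.random.normal(5, 3, 10000),
    'x3': np.random.normal(-5, 5, 10000)
})
```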

Perform feature scaling

```python
scaler = preprocessing.StandardScaler()
# fit_transform returns a NumPy array, so wrap it back into a DataFrame
scaled_df = scaler.fit_transform(df)
scaled_df = pd.DataFrame(scaled_df, columns=['x1', 'x2', 'x3'])
```

Plot using Matplotlib and Seaborn

```python
fig, (ax1, ax2) = plt.subplots(ncols=2, figsize=(6, 5))

# Left panel: density of each feature before scaling
ax1.set_title('Before Scaling')
sns.kdeplot(df['x1'], ax=ax1)
sns.kdeplot(df['x2'], ax=ax1)
sns.kdeplot(df['x3'], ax=ax1)

# Right panel: density of each feature after StandardScaler
ax2.set_title('After Standard Scaler')
sns.kdeplot(scaled_df['x1'], ax=ax2)
sns.kdeplot(scaled_df['x2'], ax=ax2)
sns.kdeplot(scaled_df['x3'], ax=ax2)

plt.show()
```

Let's see a graphical representation of our features before and after scaling.

Here you can see that, after scaling, no feature dominates the others. Feature scaling can improve model accuracy.

With this, I am sure I have been able to convince you, and not confuse you, that feature scaling is important and necessary in building a successful model😊
